Ext JS Testing Story: Under the Hood

One of the key things that sets Ext JS apart from other solutions has always been its emphasis on code quality. It is often said that any software can be improved; in our experience, it is more important to ensure that the software stays improved. Bugs happen, bits rot, changes creep in, and people come and go; all these factors contribute to a decline in quality over time. This is why we consider automated testing the cornerstone of continuous quality assurance and improvement.

Overview

Testing web applications is a broad topic in itself; testing the framework that applications are built with is another story entirely. Imagine testing a framework consisting of 1,500,000 lines of JavaScript and Sass code, running on 15+ browser platforms and trusted by 10,000 companies worldwide. How does one approach that?

By dividing the whole into smaller, more manageable parts, of course; in our case, this means using different tools and approaches at different stages of the development process. Some of you have already met Sencha Test, the solution that powers our integration and end-to-end testing. Ext JS has many customer-visible examples and demo applications that can be verified with Sencha Test; however, it is well known that integration and end-to-end testing cannot serve as the only QA solution. Our answer to this problem is a strong emphasis on unit and functional testing.

Unit testing story

Most developers we know (including us!) dislike unit testing, considering it more of a necessary evil than something to enjoy. Writing tests is tedious, running them is slow, and results reported back from CI can be hard to reproduce. Sound familiar?

While the tedium of writing tests can’t be helped much, the other problems can be addressed. Let’s treat unit testing our 2,000+ classes as an engineering challenge. The requirements pull in opposite directions:

- Test coverage needs to be thorough to ensure code quality, and this means a lot of test cases

- Tests need to run frequently to catch issues before they get into the main branch

- The test suite needs to run as fast as possible to avoid hindering developer productivity

- And finally, test results should be easily reproducible in a local environment without requiring complex setup

So how do we manage to run our 70,000 unit test cases across 15 browser platforms, do this on each commit pushed to every pull request, and collect 500,000 test results in under 15 minutes? Enter Jazzman, our virtuoso test player.

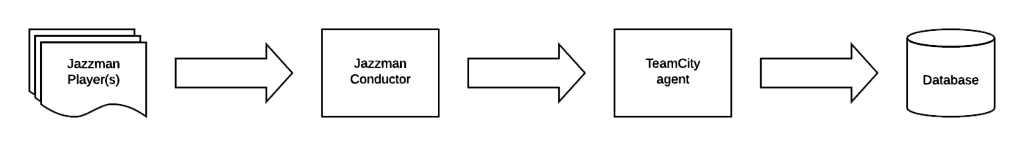

Jazzman started as a fork of the well-known Jasmine test framework and has evolved over the years into something much bigger, much faster, and much more capable: a Quality Assurance powerhouse that the Ext JS framework depends on in conjunction with Sencha Test. It consists of three major parts:

Jazzman Player

This is the part we call Jasmine on steroids. Besides being repeatedly profiled and optimized for CPU consumption and garbage collection load, it also runs tests with more flair than its ancestor:

- It can execute individual test suites and specs, so that the developer can focus only on the tests needed to work on the issue at hand;

- It supports test suite dependencies, so that only the classes used in the test suite are loaded before tests are executed;

- It is aware of the CI environment, where certain optimizations can significantly improve test run time without compromising test quality;

- It groks focus management, keyboard navigation, and many other things necessary to ensure that the framework's accessibility goals are met and maintained;

- It can get out of the way for CPU and memory profiling sessions, which allows us to improve the framework while making sure it works correctly before and after optimizations;

- And many, many more.
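Loading only the classes a suite needs amounts to resolving the suite's transitive dependencies and loading them in dependency order. The article does not show how Jazzman does this, so the following is a minimal sketch under assumed names: `ClassGraph`, `resolveLoadOrder`, and the `App.*` classes are hypothetical, and a real loader would derive the graph from class metadata (for example, Ext JS `requires` declarations) rather than a hard-coded map.

```typescript
// Hypothetical dependency graph: class name -> names of the classes it requires.
type ClassGraph = Record<string, string[]>;

// Returns every class needed by `roots` (transitively), ordered so that each
// class comes after all of its dependencies (depth-first topological sort).
function resolveLoadOrder(graph: ClassGraph, roots: string[]): string[] {
  const order: string[] = [];
  const done = new Set<string>();     // classes already placed in `order`
  const visiting = new Set<string>(); // classes on the current DFS path

  function visit(name: string): void {
    if (done.has(name)) return;
    if (visiting.has(name)) {
      throw new Error(`Circular dependency involving ${name}`);
    }
    visiting.add(name);
    for (const dep of graph[name] ?? []) {
      visit(dep);
    }
    visiting.delete(name);
    done.add(name);
    order.push(name);
  }

  roots.forEach((root) => visit(root));
  return order;
}

// Illustrative graph; the class names are made up for this sketch.
const graph: ClassGraph = {
  "App.Base": [],
  "App.Store": ["App.Base"],
  "App.Grid": ["App.Base", "App.Store"],
  "App.Chart": ["App.Base"],
};

// A suite that only tests App.Grid never loads App.Chart.
console.log(resolveLoadOrder(graph, ["App.Grid"]));
// load order: App.Base, App.Store, App.Grid
```

Depth-first ordering guarantees that each class appears after everything it requires, and the `visiting` set turns a circular dependency into an explicit error instead of infinite recursion.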

Last but not least, simply running test specs and collecting results is not enough for Jazzman: it also performs code quality and resource leak checks after each spec has finished. A global variable created where it should not be, a component created and never destroyed, a DOM node attached to the document body and not cleaned up, an animation started and not finished, a timer set and not cleared, an Ajax request bypassing mock handlers, or even an already destroyed object being reused: any of these fails the spec automatically.

The importance of this mechanism cannot be overstated. It took us several months of hard work before the Ext JS 6.0 release to incorporate resource leak checking into the framework development workflow, and that work alone allowed us to proactively fix hundreds of potential issues that will never reach your code!
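The per-spec checks described above boil down to taking a snapshot before each spec and comparing against it afterwards. Jazzman's actual implementation is not shown in the article; the sketch below is a minimal illustration in which `LeakChecker`, `FakeComponent`, and the `on*` hooks are hypothetical, a plain object stands in for the browser's global object, and the framework is assumed to report object and timer lifetimes through those hooks. It covers four of the listed checks: leaked globals, undestroyed objects, uncleared timers, and reuse of destroyed objects.

```typescript
// Anything with a lifetime the checker should watch (components, stores, ...).
interface Tracked {
  readonly id: string;
  destroyed: boolean;
}

// Minimal per-spec leak checker; all names here are hypothetical.
class LeakChecker {
  private globalsBefore = new Set<string>();
  private live = new Map<string, Tracked>();
  private timers = new Set<number>();
  private reused: string[] = [];

  // `scope` plays the role of the global object (e.g. window).
  constructor(private readonly scope: object) {}

  // Called before each spec: remember existing globals, reset tracking.
  beforeSpec(): void {
    this.globalsBefore = new Set(Object.keys(this.scope));
    this.live.clear();
    this.timers.clear();
    this.reused = [];
  }

  // Hooks the framework (or wrapped browser APIs) would call.
  onCreate(obj: Tracked): void { this.live.set(obj.id, obj); }
  onDestroy(obj: Tracked): void { this.live.delete(obj.id); }
  onTimerSet(id: number): void { this.timers.add(id); }
  onTimerCleared(id: number): void { this.timers.delete(id); }
  onUse(obj: Tracked): void {
    if (obj.destroyed) this.reused.push(obj.id);
  }

  // Called after each spec: a non-empty list means the spec fails.
  afterSpec(): string[] {
    const problems: string[] = [];
    for (const key of Object.keys(this.scope)) {
      if (!this.globalsBefore.has(key)) problems.push(`Leaked global: ${key}`);
    }
    this.live.forEach((obj) => problems.push(`Not destroyed: ${obj.id}`));
    this.timers.forEach((id) => problems.push(`Timer not cleared: ${id}`));
    this.reused.forEach((id) => problems.push(`Used after destroy: ${id}`));
    return problems;
  }
}

// A stand-in component that reports its lifetime to the checker.
class FakeComponent implements Tracked {
  destroyed = false;
  constructor(readonly id: string, private readonly checker: LeakChecker) {
    checker.onCreate(this);
  }
  destroy(): void {
    this.destroyed = true;
    this.checker.onDestroy(this);
  }
}

const fakeWindow: Record<string, unknown> = { document: {} };
const checker = new LeakChecker(fakeWindow);

checker.beforeSpec();
new FakeComponent("grid-1", checker);                // created, never destroyed
const panel = new FakeComponent("panel-1", checker);
panel.destroy();
fakeWindow["oops"] = 42;                             // accidental global
checker.onTimerSet(7);                               // timer never cleared
checker.onUse(panel);                                // destroyed object reused
// Reports: leaked global "oops", undestroyed "grid-1",
// uncleared timer 7, and reuse of "panel-1".
console.log(checker.afterSpec());
```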

Jazzman Reporter

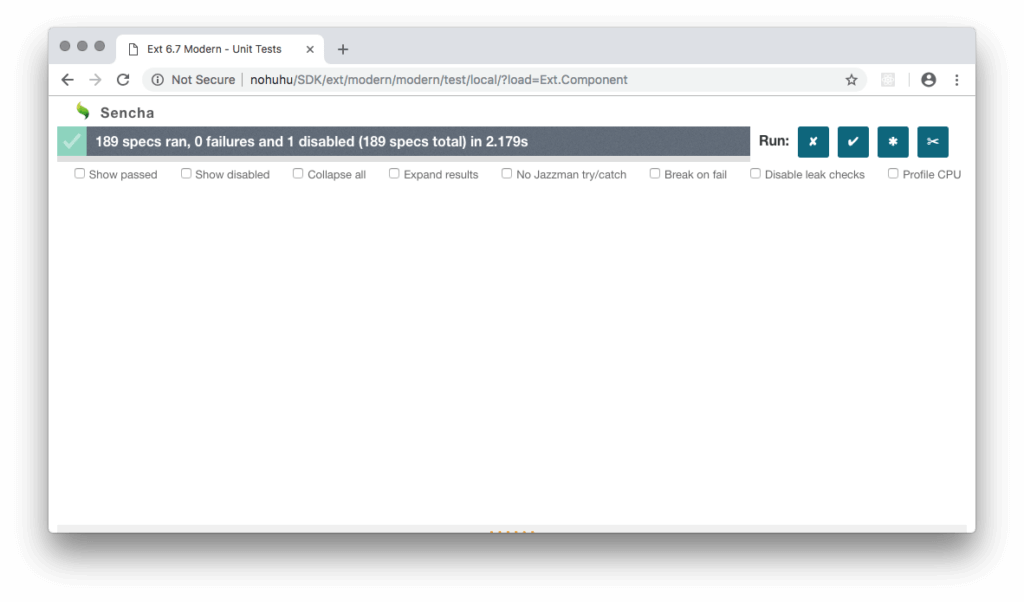

Jazzman Reporter is the web application interface for the Player:

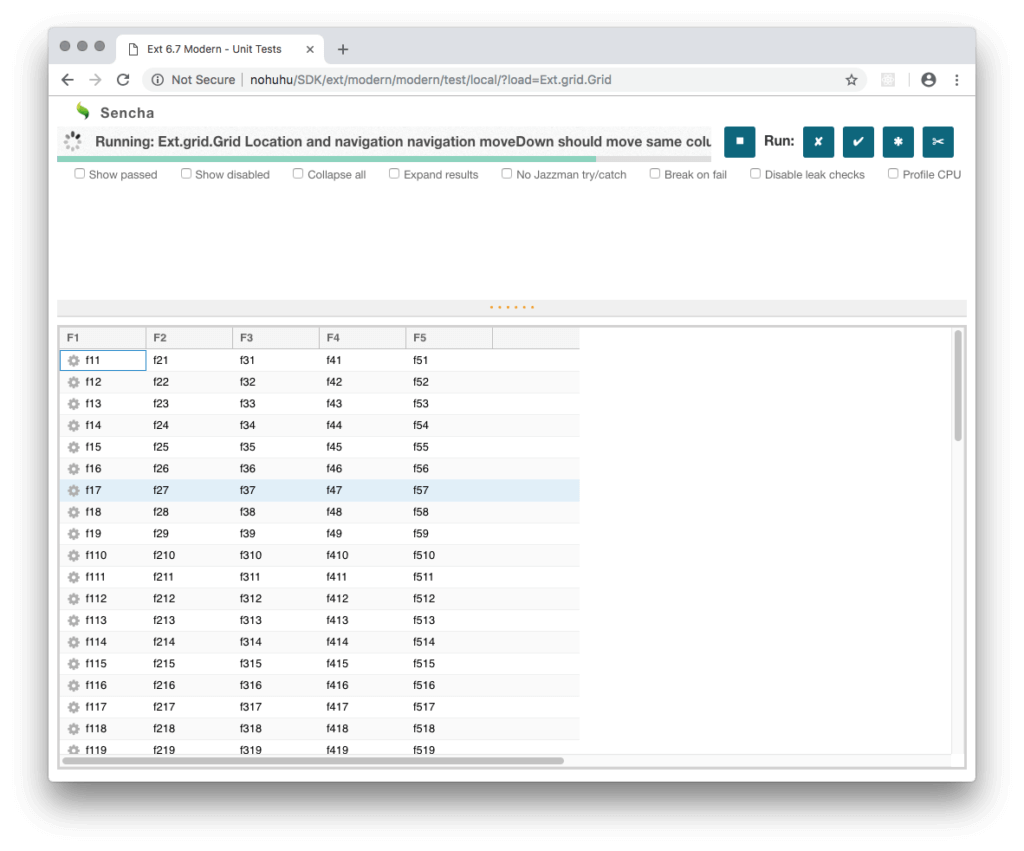

It is small and lightweight, and it is deliberately built in vanilla HTML, CSS, and JavaScript without any frameworks, to cut down on dependencies and potential issues. The Player and its tests are sandboxed in an iframe element.

This approach allowed us to work around differences between local and CI deployments, and to abstract the reporting code and keep it outside of the Player. The end result is almost 100% reproducibility of test results between the CI environment and a developer’s local machine, which is very helpful for quick turnaround on issues.
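Keeping the reporting code outside the Player means the Player only needs a narrow reporting interface, and the environment decides what sits behind it. The following sketch illustrates that separation under assumptions: `SpecResult`, `Reporter`, `LocalReporter`, `ForwardingReporter`, and `runSpecs` are hypothetical names, and in a browser the call from the sandboxed iframe to the surrounding page would cross the frame boundary (for example via `window.postMessage`), which is replaced here with direct calls.

```typescript
// Hypothetical result shape; the article does not describe Jazzman's format.
interface SpecResult {
  suite: string;
  spec: string;
  passed: boolean;
  message?: string;
}

// The only thing the Player knows about reporting.
interface Reporter {
  specDone(result: SpecResult): void;
  runDone(): void;
}

// Local mode: keep results so the Reporter UI can render them.
class LocalReporter implements Reporter {
  readonly results: SpecResult[] = [];
  finished = false;
  specDone(result: SpecResult): void { this.results.push(result); }
  runDone(): void { this.finished = true; }
}

// CI mode: forward results to a transport, e.g. a function that POSTs them.
class ForwardingReporter implements Reporter {
  constructor(private readonly send: (item: SpecResult | "done") => void) {}
  specDone(result: SpecResult): void { this.send(result); }
  runDone(): void { this.send("done"); }
}

interface Spec {
  suite: string;
  spec: string;
  fn: () => void;
}

// The Player runs specs the same way regardless of which Reporter it gets.
function runSpecs(specs: Spec[], reporter: Reporter): void {
  for (const s of specs) {
    let passed = true;
    let message: string | undefined;
    try {
      s.fn();
    } catch (e) {
      passed = false;
      message = e instanceof Error ? e.message : String(e);
    }
    reporter.specDone({ suite: s.suite, spec: s.spec, passed, message });
  }
  reporter.runDone();
}

const specs: Spec[] = [
  { suite: "Math", spec: "adds", fn: () => {} },
  { suite: "Math", spec: "fails on purpose", fn: () => { throw new Error("expected 3 to be 4"); } },
];

const local = new LocalReporter();
runSpecs(specs, local);

const sent: Array<SpecResult | "done"> = [];
runSpecs(specs, new ForwardingReporter((item) => sent.push(item)));
```

Because `runSpecs` never learns which reporter it was given, the same specs produce the same results locally and in CI; only the destination of those results changes.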

When running locally, the Reporter simply plays a supporting role for the Player; it is in the CI environment that it gets to shine. There, running in a browser requested from a cloud provider, it follows the Conductor’s lead and tunes its Player to perform in unison with the Big Band.

Jazzman Conductor

At the heart of every performance, the Conductor orchestrates individual Jazzmen so that they play smoothly in parallel, collects the results, and prints them for the TeamCity agent to consume. Browser instances are requested from a cloud provider (we use Sauce Labs) and controlled via the WebDriver protocol. Unlike other WebDriver-based solutions, ours does not explicitly control tests from the orchestrating side; tests run independently in each browser, just as they do locally, but their results are also reported to the Conductor. This allows for very fast test execution at the cost of having to handle a significant stream of test results to collect and process.

In fact, even the volume of data flowing to and from the test subject browsers has become a serious problem at our scale. With test suites split into “chunks” for parallel processing and 50 browser sessions running simultaneously, we see 4-6 GB of static assets downloaded by test subject browsers per test suite run, and hundreds of MB of generated test results.
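The article does not say how suites are divided into chunks for the parallel sessions. One common approach, assumed here purely for illustration, is the greedy longest-processing-time-first (LPT) heuristic: sort suites by estimated duration and keep adding the next one to the least-loaded chunk. `Suite`, `splitIntoChunks`, and the durations below are hypothetical.

```typescript
// Hypothetical suite descriptor; `estimatedMs` could come from earlier runs.
interface Suite {
  name: string;
  estimatedMs: number;
}

// Greedy LPT split: take suites from slowest to fastest and always add
// the next one to the chunk with the least estimated work so far.
function splitIntoChunks(suites: Suite[], chunkCount: number): Suite[][] {
  if (chunkCount < 1) throw new Error("chunkCount must be at least 1");
  const chunks: Suite[][] = [];
  const loads: number[] = [];
  for (let i = 0; i < chunkCount; i++) {
    chunks.push([]);
    loads.push(0);
  }
  const slowestFirst = suites.slice().sort((a, b) => b.estimatedMs - a.estimatedMs);
  for (const suite of slowestFirst) {
    let lightest = 0;
    for (let i = 1; i < chunkCount; i++) {
      if (loads[i] < loads[lightest]) lightest = i;
    }
    chunks[lightest].push(suite);
    loads[lightest] += suite.estimatedMs;
  }
  return chunks;
}

const suites: Suite[] = [
  { name: "grid", estimatedMs: 50 },
  { name: "form", estimatedMs: 40 },
  { name: "tree", estimatedMs: 30 },
  { name: "chart", estimatedMs: 20 },
  { name: "button", estimatedMs: 10 },
];

const chunks = splitIntoChunks(suites, 2);
chunks.forEach((chunk, i) => {
  console.log(`chunk ${i}:`, chunk.map((s) => s.name).join(", "));
});
// chunk 0: grid, chart, button
// chunk 1: form, tree
```

With 50 browser sessions, `chunkCount` would simply be 50. LPT is a well-known heuristic whose longest chunk is provably within a factor of 4/3 of the best possible split, which matters because the slowest chunk determines when the whole run finishes.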

Modern browsers run fast and produce thousands of test results in a short time; this data is sent to the Conductor at unpredictable intervals depending on the browser's available CPU allocation, network latency, and other factors. The Conductor needs to process this data, format outgoing messages, and print them to the standard output stream, which in turn is consumed by the TeamCity agent over a pipe.
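The article does not specify the Conductor's output format. Assuming it uses TeamCity service messages (the `##teamcity[...]` lines a TeamCity agent parses from a build's standard output), formatting a single spec result could look like the sketch below. `SpecResult`, `tcEscape`, and `toServiceMessages` are hypothetical names; the message names `testStarted`, `testFailed`, and `testFinished` and the `|` escaping rules come from TeamCity's service message format. Suite grouping (`testSuiteStarted`/`testSuiteFinished`) and rarer escapes such as `|0xNNNN` for arbitrary Unicode characters are left out for brevity.

```typescript
// Escapes a value for a TeamCity service message attribute:
// | ' [ ] get a | prefix, and line breaks become |n and |r.
function tcEscape(value: string): string {
  return value.replace(/[|'\[\]\n\r]/g, (ch) => {
    if (ch === "\n") return "|n";
    if (ch === "\r") return "|r";
    return "|" + ch;
  });
}

// Hypothetical result shape posted by a Player.
interface SpecResult {
  suite: string;
  spec: string;
  passed: boolean;
  durationMs: number;
  message?: string;
}

// Turns one spec result into testStarted / testFailed / testFinished lines.
function toServiceMessages(r: SpecResult): string[] {
  const name = tcEscape(`${r.suite}: ${r.spec}`);
  const lines = [`##teamcity[testStarted name='${name}']`];
  if (!r.passed) {
    const message = tcEscape(r.message ?? "Spec failed");
    lines.push(`##teamcity[testFailed name='${name}' message='${message}']`);
  }
  lines.push(`##teamcity[testFinished name='${name}' duration='${r.durationMs}']`);
  return lines;
}

const result: SpecResult = {
  suite: "Button",
  spec: "fires click",
  passed: false,
  durationMs: 12,
  message: "Expected 'a' to be 'b'",
};

// The Conductor would write these lines to stdout for the TeamCity agent.
for (const line of toServiceMessages(result)) console.log(line);
// ##teamcity[testStarted name='Button: fires click']
// ##teamcity[testFailed name='Button: fires click' message='Expected |'a|' to be |'b|'']
// ##teamcity[testFinished name='Button: fires click' duration='12']
```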

The whole exercise then becomes a fine balancing act between reading from the network, processing results, and blocking on the pipe write. If we don’t read from the network fast enough, the test subject browsers have to hold too much test data and may crash. If we read fast but can’t process messages fast enough, the Conductor process consumes a significant amount of RAM and garbage collection takes more CPU time, resulting in less frequent feedback and a greater risk of stalling the test subject browsers. If we can read and process messages but cannot write to the output stream fast enough, the problem is compounded: even more RAM is consumed and GC scans take longer.

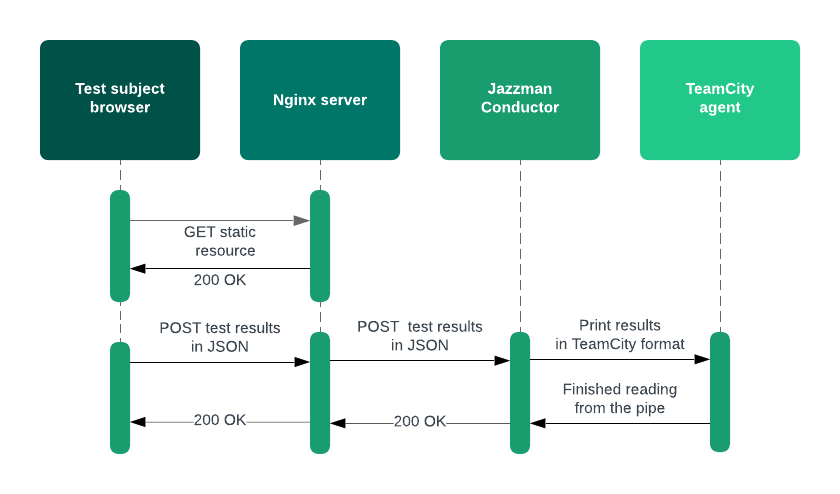

To solve these problems, the Jazzman Player reports test results by making an asynchronous POST request and pausing its block queue until it receives an OK response from the Conductor. In turn, the Conductor, an event-loop-based Node.js application, receives test result requests, formats the results, and prints them to the standard output stream, also asynchronously. When all messages from a given Player have been printed, the Conductor responds with 200 OK and the Player proceeds with further tests.
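The acknowledgment scheme above can be modeled as a small, synchronous simulation. This is a sketch, not the Conductor's code: HTTP is replaced with callbacks, the stdout pipe with an in-memory `SlowPipe` whose `tick()` stands in for the TeamCity agent reading a few lines at a time, and `SlowPipe`, `Conductor`, `Player`, and their methods are all hypothetical. It demonstrates the property described above: because a Player pauses until its previous batch has been printed, buffered output never exceeds players x batch size.

```typescript
// A write that reports back (onFlushed) once all its lines are consumed.
interface PendingWrite {
  lines: string[];
  onFlushed: () => void;
}

// In-memory stand-in for the stdout pipe read by the TeamCity agent.
class SlowPipe {
  readonly written: string[] = [];
  private queue: PendingWrite[] = [];

  constructor(private readonly linesPerTick: number) {}

  write(lines: string[], onFlushed: () => void): void {
    if (lines.length === 0) {
      onFlushed();
      return;
    }
    this.queue.push({ lines: lines.slice(), onFlushed });
  }

  get queuedLines(): number {
    return this.queue.reduce((n, w) => n + w.lines.length, 0);
  }

  // One read by the consumer: moves at most `linesPerTick` lines out.
  tick(): void {
    let budget = this.linesPerTick;
    while (budget > 0 && this.queue.length > 0) {
      const head = this.queue[0];
      this.written.push(head.lines.shift() as string);
      budget--;
      if (head.lines.length === 0) {
        this.queue.shift();
        head.onFlushed();
      }
    }
  }
}

// Formats a POSTed batch and acknowledges it only after it has been printed.
class Conductor {
  constructor(private readonly pipe: SlowPipe) {}

  receive(playerId: string, results: string[], respondOk: () => void): void {
    const lines = results.map((r) => `[${playerId}] ${r}`);
    this.pipe.write(lines, respondOk); // respondOk stands in for "200 OK"
  }
}

// Runs specs in batches and pauses while a batch is unacknowledged.
class Player {
  private next = 0;
  private waiting = false;

  constructor(
    readonly id: string,
    private readonly total: number,
    private readonly batchSize: number,
    private readonly conductor: Conductor,
  ) {}

  get done(): boolean {
    return this.next >= this.total && !this.waiting;
  }

  step(): void {
    if (this.waiting || this.next >= this.total) return;
    const batch: string[] = [];
    while (batch.length < this.batchSize && this.next < this.total) {
      batch.push(`spec ${this.next++} passed`);
    }
    this.waiting = true; // pause the queue until the Conductor says OK
    this.conductor.receive(this.id, batch, () => {
      this.waiting = false;
    });
  }
}

const pipe = new SlowPipe(3); // the consumer takes only 3 lines per tick
const conductor = new Conductor(pipe);
const players = ["A", "B", "C"].map((id) => new Player(id, 10, 4, conductor));

let maxQueued = 0;
let ticks = 0;
while (players.some((p) => !p.done)) {
  players.forEach((p) => p.step());
  maxQueued = Math.max(maxQueued, pipe.queuedLines);
  pipe.tick();
  if (++ticks > 1000) throw new Error("simulation did not finish");
}

// All 30 results get printed, and the buffer never holds more than
// 3 players x 4 results, however slow the consumer is.
console.log(pipe.written.length, maxQueued);
```

A slower consumer (a smaller `linesPerTick`) only makes the Players wait longer; it does not grow the Conductor's buffer, which is what makes this kind of flow control self-adjusting.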

This implementation of test flow control makes the whole system self-adjusting, efficient, and scalable: at present we run 50 parallel Jazzman Player sessions, and the Conductor’s CPU load is negligible, especially when it is fronted by an Nginx HTTP server that serves static files as quickly and efficiently as possible.

Conclusion

In this article we tried to shed some light on the Quality Assurance processes used here at Sencha. While Sencha Test is indeed the most comprehensive solution for testing Ext JS applications, Ext JS itself is a very large framework, and its development relies on different approaches to automated testing at different stages. We remain committed to maintaining high quality across all of our products, and we continuously work to improve both the products and the tooling behind our success. We also love to hear feedback from our customers, which is one of the reasons we decided to release Jazzman under an open source license. Jazzman is available on GitHub, and it is the same source that we use internally for the Ext JS framework (a Git subtree, in fact). Feel free to fork it, play with it, and let us know what you think!

Be on the lookout for more from Sencha as we move to further embrace open source, involve our customers and grow the Ext JS fanbase!
